Educational Workshop on Responsible AI for Peace and Security (UNODA)

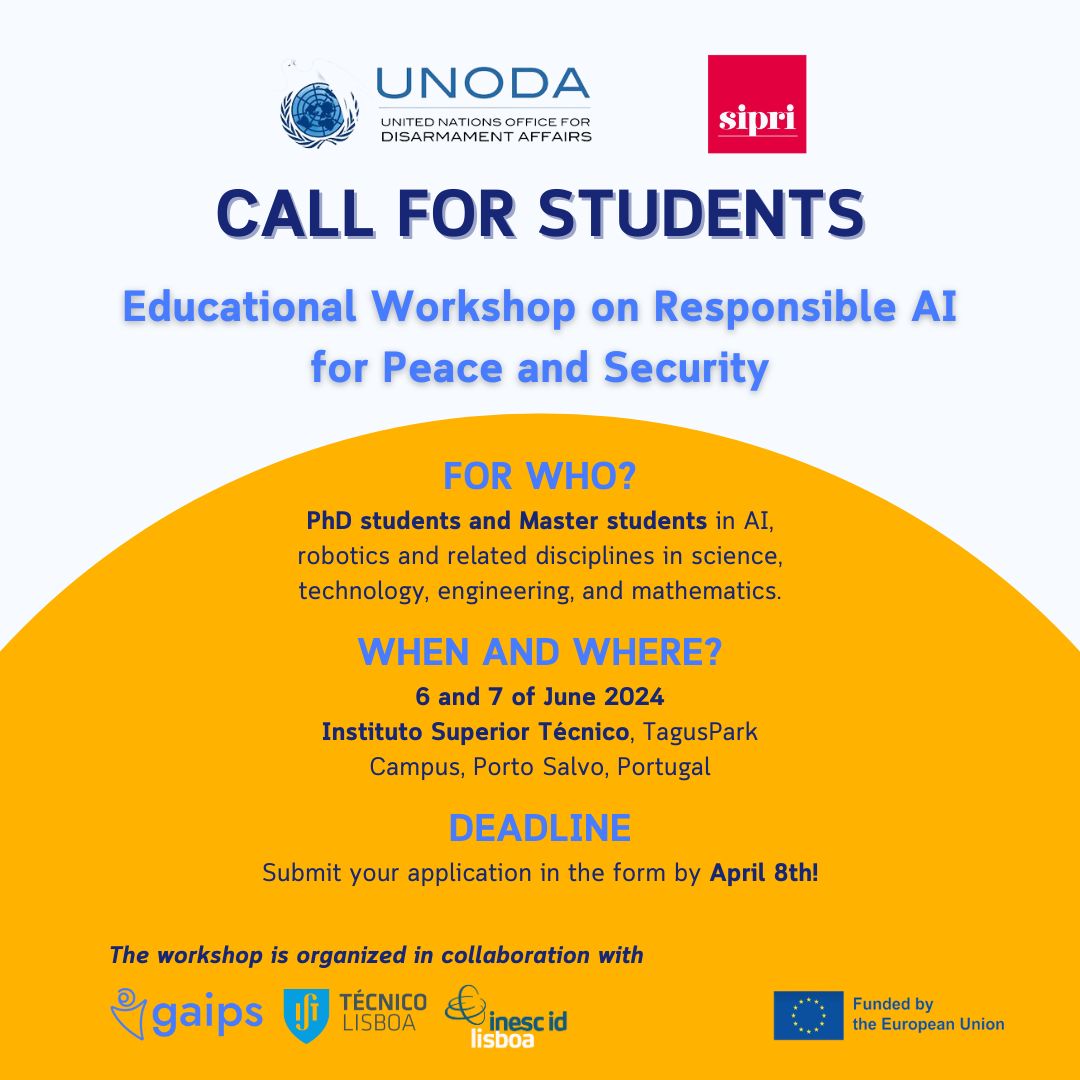

On June 6 and 7, the United Nations Office for Disarmament Affairs (UNODA) and the Stockholm International Peace Research Institute (SIPRI) are offering a selected group of technical students the opportunity to join a two-day educational workshop on Responsible AI for peace and security.

The third workshop in the series will be held in Porto Salvo, Portugal, in collaboration with GAIPS, INESC-ID, and Instituto Superior Técnico. The workshop is open to students affiliated with universities in Europe, Central and South America, the Middle East and Africa, Oceania, and Asia.

Date & Time: June 6 to 7

Where: IST – Oeiras, Porto Salvo

Registration deadline: May 29 (here)

Summary: “As with the impacts of Artificial intelligence (AI) on people’s day-to-day lives, the impacts for international peace and security include wide-ranging and significant opportunities and challenges. AI can help achieve the UN Sustainable Development Goals, but its dual-use nature means that peaceful applications can also be misused for harmful purposes such as political disinformation, cyberattacks, terrorism, or military operations. Meanwhile, those researching and developing AI in the civilian sector remain too often unaware of the risks that the misuse of civilian AI technology may pose to international peace and security and unsure about the role they can play in addressing them. Against this background, UNODA and SIPRI launched, in 2023, a three-year educational initiative on Promoting Responsible Innovation in AI for Peace and Security. The initiative, which is supported by the Council of the European Union, aims to support greater engagement of the civilian AI community in mitigating the unintended consequences of civilian AI research and innovation for peace and security. As part of that initiative, SIPRI and UNODA are organising a series of capacity building workshops for STEM students (at PhD and Master levels). These workshops aim to provide the opportunity for up-and-coming AI practitioners to work together and with experts to learn about a) how peaceful AI research and innovation may generate risks for international peace and security; b) how they could help prevent or mitigate those risks through responsible research and innovation; c) how they could support the promotion of responsible AI for peace and security.”